Introduction:

In part 1 we talked about Caching introduction and some terminologies of caching and in part 2 and part 3 we have seen some implementation of the famous replacement cache algorithms and in part 4 we saw comparisons between some famous caching frameworks and in this part we are going to continue what we started in part 4 and as in part 4 we will concentrate only on memory caching.

The Task:

After programmer 1 released the caching article in “Programming Mania” the geek to geek magazine, he got a lot of threaten mails and terrible messages from caching geeks defending their beloved caching frameworks and warning him if he didn’t make their beloved caching framework win the contest, he will regret the day he became a programmer.

That didn’t scare our programmer and he went on completing the second part of the comparison

. ShiftOne

· WhirlyCache

· SwarmCache

· JBoss Cache

ShiftOne:

ShiftOne or as they call JOCache is a lightweight caching framework that implements several strict object caching policies which comes up with a set of cache algorithm implementations that supports in memory cache.

ShiftOne cache forces two rules for every cache:

- Max Size - each cache has a hard limit on the number of elements it will contain. When this limit is exceeded, the least valuable element is evicted. This happens immediately, on the same thread. This prevents the cache from growing uncontrollably

- Element Timeout - each cache has a maximum time that it's elements are considered valid. No element will ever be returned that exceeds this time limit. This ensures a predictable data freshness.

ShiftOne use decorator pattern in order to make it more flexible for the user to use any underneath caching product to maintain the cache.

The following caching products can be plugged into ShiftOne:

- EHCache

- SwarmCache

- JCS Cache

- Oro Cache

ShiftOne enables client to gather statistics (Hit/Miss) about the cache by using JMX, not only that but also enables integration with Hibernate ORM through adaptors.

When it comes to in memory caching (which is the only thing JOcache supports) JOCache uses Soft references for the caching entries.

JOCache was originally implemented as part of the ExQ project to support ResultSet caching. It was later split out for use by other projects. It was designed to cache large expensive database query results.

-Memory Cache:

ShiftOne cache supports LRU, LFU, FIFO, Single, Zero

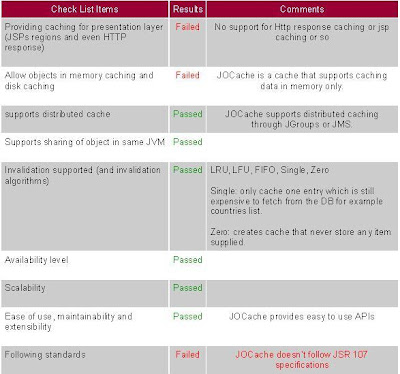

ShiftOne and Check List:

WhirlyCache:

WhirlyCache is a fast, configurable in-memory object cache for Java. It can be used to speed up a website or an application by caching objects that would otherwise have to be created by querying a database or by another expensive procedure it also provides an in-memory cache.

WhirlyCache runs a separate thread to prune the cache; in other words, the data from the cache is not provided by the same application thread that the client uses. Thus, there are fewer burdens on the application thread.

Whirlycache is built around several design principles that differ from other cache implementations:

- Require synchronization as infrequently as possible

- Do as little as possible in the insertion and retrieval operations

- Soft limits are acceptable for many applications

- Disk overflow becomes a bad idea very quickly

Many attributes of Whirlycache are configurable in an XML file, but the most important components of the cache are the Backend, the Tuner, and the Policy.

WhirlyCache support pluggable backend implementations that need to implement the ManagedCache interface (which is a subinterface of java.util.Map, although not all the methods of Map need to be implemented). WhirlyCache currently support two backends: ConcurrentHashMap and FastHashMap. You can even implement your own backed by implementing the ManagedCache interface.

The Tuner is a background thread that performs cache maintenance activities specified in the configured Policy implementation. One Tuner thread per cache is created and it is configured to run every n seconds. It depends on your application, but you definitely don't want to run the Tuner too often since it will only serve to burden the system unnecessarily.

-Memory Cache:

Currently, WhirlyCache offers FIFO, LFU and LRU. You can specify a different Policy implementation per named cache in the whirlycache.xml configuration file

WhirlyCache and Check List:

SwarmCache is an in-memory cache intended more for caching domain objects on the data access layer. It offers support for a distributed cache in a clustered environment.

SwarmCache supports the LRU caching algorithm. However, SwarmCache is essentially an in-memory cache. When LRU is set as the caching algorithm and the memory capacity is reached, SwarmCache evicts the memory objects as per LRU logic from its memory.

SwarmCache uses soft references to the cached objects. So, if the LRU is not set as the caching algorithm, it relies on the garbage collector to swipe through its memory and clean objects that are least frequently accessed. However, SwarmCache recommends a combination of the above two to be set as the caching algorithm.

SwarmCache provides a wrapper in order to be used with Hibernate ORM and DataNucleus

When used in clustering environment each server instantiates its own manager. For each type of object that the server wishes to cache, it instantiates a cache and adds it to the manager. The manager joins a multicast group and communicates with other managers in the group. Whenever an object is removed from a cache, the manager notifies all other managers in the group. Those managers then ensure that the object is removed from their respective caches. The result is that a server will not have in its cache a stale version of an object that has been updated or deleted on another server.

Note that the managers only need to communicate when an object is removed from a cache. This only happens when an object is updated or deleted. The managers do not co-operate beyond this. This means that the amount of inter-server communications is proportional to the amount of updates/deletes of the application. Also notice that there is no "server"; all hosts are equal peers and they can come and go from the cache group as they please without affecting other group members. Thus the operation of the distributed cache is very robust

-Memory Cache:

LRU, Timeout, Automatic and Hybrid

SwarmCache and Check List:

JBoss Cache:

JBoss offers two kinds of cache flavors, namely CoreCache and PojoCache.

- JBoss Core Cache is a tree-structured, clustered, transactional cache. It can be used in a standalone, non-clustered environment, to cache frequently accessed data in memory thereby removing data retrieval or calculation bottlenecks while providing "enterprise" features such as JTA compatibility, eviction and persistence.

JBoss Cache is also a clustered cache, and can be used in a cluster to replicate state providing a high degree of failover. A variety of replication modes are supported, including invalidation and buddy replication, and network communications can either be synchronous or asynchronous.

JBoss Cache can - and often is - used outside of JBoss AS, in other Java EE environments such as Spring, Tomcat, Glassfish, BEA WebLogic, IBM WebSphere, and even in standalone Java programs thanks to its minimal dependency set

- POJO Cache is an extension of the core JBoss Cache API. POJO Cache offers additional functionality such as: maintaining object references even after replication or persistence.

fine grained replication, where only modified object fields are replicated.

"API-less" clustering model where POJOs are simply annotated as being clustered.

In addition, JBoss Cache offers a rich set of enterprise-class features:

being able to participate in JTA transactions (works with most Java EE compliant transaction managers).

Attach to JMX consoles and provide runtime statistics on the state of the cache.

Allow client code to attach listeners and receive notifications on cache events.

Allow grouping of cache operations into batches, for efficient replication

The cache is organized as a tree, with a single root. Each node in the tree essentially contains a map, which acts as a store for key/value pairs. The only requirement placed on objects that are cached is that they implement java.io.Serializable.

JBoss Cache works out of the box with most popular transaction managers, and even provides an API where custom transaction manager lookups can be written.

The cache is completely thread-safe. It employs multi-versioned concurrency control (MVCC) to ensure thread safety between readers and writers, while maintaining a high degree of concurrency. The specific MVCC implementation used in JBoss Cache allows for reader threads to be completely free of locks and synchronized blocks, ensuring a very high degree of performance for read-heavy applications. It also uses custom, highly performant lock implementations that employ modern compare-and-swap techniques for writer threads, which are tuned to multi-core CPU architectures.

Multi-versioned concurrency control (MVCC) is the default locking scheme since JBoss Cache 3.x.

-Memory Cache:

JBoss cache support LRU, LFU, MRU, Expiration, ElementSize and FIFO

JBoss Check List:

Performance in action:

Ok now it is show time for this performance testing programmer 1 used 3 different test cases which are as follow:

- Test Case from JCS site (applied on all caching frameworks)

- JBoss Cache benchmark framework (which is really a very nice cache benchmark framework)

- Test Case from Cache4J site (applied on all caching frameworks)

In the 1st and 3rd cache test case it just simple testing of retrieve and populating the cache, while in JBoss cache benchmark there are a lot of test cases shipped with the benchmark from replication to distributed and clustering testing.

All the testing here were performed on a single machine (no distributed testing were performed) and all the testing were performed in memory.

The versions of the frameworks we are going to test now are as follow:

OSCache: 2.4.1

Ehcache: 1.6.0

JCS: 1.3

Cache4j: 0.4

JBoss: 3.0.0

Whirly: 1.0.1

Swarm: 1.0

ShiftOne: 2.0b

JBoss cache benchmark:

We can see here that there is nearly 8 million get operation invoked on the different cache frameworks and the WhirlyCache took the smallest amount of time (followed by JBoss Cache) while OSCache took the biggest time.

we see here that there is nearly 2 million put operation invoked on the different cache frameworks and WhirlyCache took the smallest time while OSCache took the biggest time

The cache test performed here was in memory cache and there were 25 threads accessing the cache.

JCS Test Case:

Cache4j vs. JBoss EhCache vs. JBoss JCS vs. JBoss

OSCache vs. JBoss ShiftOne vs. cache4J Shiftone vs. EhCache

ShiftOne vs. JCS ShiftOne vs. OSCache ShiftOne vs. Swarm

ShiftOne vs.JBoss Swarm vs. Cache4J Swarm vs. EHCache

Swarm vs. Jboss Swarm vs. JCS Swarm vs. OSCache

Whirly vs. Cache4J Whirly vs. EhCache Whirly vs. JBoss

Whirly vs. JCS Whirly vs. OScache Whirly vs. ShiftOne

Whirly vs. Swarm

The winner in this test in Ehcache which achieved outstanding results against all the other frameworks, in 2nd place comes Whirly Cache and in 3rd place comes JBoss cache

Cache4j Test Case:

As we can see the SwarmCache took the biggest time while ehcache and whirlyCache took the smallest time.

This test also performs addition and retrieving for cache items which means there is no cache miss (like the test cases in JBoss cache benchmark)

But there is an extra step this test do which is removing cache entries from cache and if we omitted this operation (just concentrated on the put and get operation) we will get the following results

As we can see the JBoss and Swarm time is heavily reduced, this mean that the remove operation takes a lot of time in these two cache frameworks, but lets not forget that JBoss is not a flat cache (a structure cache) which might be the reason for the delay and also it uses transaction like mechanism for caching which would affect also its performance but still great feature (and for sure we wont invoke remove method so often)

And the gold medal goes to!

Our candidate frameworks in this part are WhirlyCache and JBoss cache both of them are achieved very good performance in the cache hit and miss but let's not forget that Whirly is not distributed cache which is a bad thing , beside that JBoss offers structure cache as we discussed before beside the transaction mechanism that is offered by it also , WhirlyCache is really nice for in memory cache either in single or multi threaded application on the contrary Swarm cache performance is really bad in multi threading application , it throw out of memory exception more than once while it is being tested .

Second place goes to ShiftOne which is really nice but suffer from lake of support ,documentation and even configuration.

If we considered the caching we introduced in the previous part we would have the following order:

First place: EhCache (still the best) along with Whirly and JBoss

Second place: ShiftOne and JCS

Third place: Cache4J and OSCache

The worst performance was achieved by Swarm cache (I guess It would be fast not to cache you objects than caching it with Swarm cache :D )

Conclusion:

In this part we have seen the comparison of different Open source cache frameworks we and concluded that EhCache is considered one of the bets choices (beside JBoss and Whirly cache) while Swarm is one of the poorest choice you will ever make.

Read more!